
Configuring Apache Airflow on Kubernetes for use with Elyra

Pipelines in Elyra can be run locally in JupyterLab or remotely on Kubeflow Pipelines or Apache Airflow, where shared resources can speed up the processing of compute-intensive tasks.

Note: Support for Apache Airflow is experimental.

This document outlines how to set up a new Elyra-enabled Apache Airflow environment or add Elyra support to an existing deployment.

This guide assumes general working knowledge of Kubernetes and of administering a Kubernetes cluster.

Prerequisites

- A private Git repository on github.com, GitHub Enterprise, gitlab.com, or GitLab Enterprise that is used to store DAGs.

- S3-based cloud object storage, e.g. IBM Cloud Object Storage, Amazon S3, or MinIO.

AND

- A Kubernetes Cluster without Apache Airflow installed

- Ensure Kubernetes is at least v1.18. Earlier versions might work but have not been tested.

- Helm v3.0 or later

- Use the Helm chart available in the Airflow source distribution with the Elyra sample configuration.

OR

- An existing Apache Airflow cluster

- Ensure Apache Airflow is at least v1.10.8 and below v2.0.0. Other versions might work but have not been tested.

- Apache Airflow is configured to use the Kubernetes Executor.

- Ensure the KubernetesPodOperator is installed and available in the Apache Airflow deployment.
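For an existing deployment, you can check the version and operator requirements from a Python environment that uses the same Airflow installation as the scheduler and workers. The sketch below is a minimal, hypothetical pre-flight check. It assumes the Airflow 1.10.x import path `airflow.contrib.operators.kubernetes_pod_operator`, and the helper names are illustrative only.

```python
"""Hypothetical pre-flight check for an existing Airflow 1.10.x deployment."""
import importlib
import re


def parse_version(version: str) -> tuple:
    """Return the leading numeric (major, minor, patch) of a version string.

    Suffixes such as '+composer' or 'rc1' are ignored; a missing patch counts as 0.
    """
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch = match.groups(default="0")
    return int(major), int(minor), int(patch)


def is_tested_airflow_version(version: str) -> bool:
    """True for >= 1.10.8 and < 2.0.0, the range this guide was tested with."""
    return (1, 10, 8) <= parse_version(version) < (2, 0, 0)


def pod_operator_available() -> bool:
    """True if the KubernetesPodOperator imports from its assumed 1.10.x location."""
    try:
        module = importlib.import_module(
            "airflow.contrib.operators.kubernetes_pod_operator"
        )
    except ImportError:  # also covers a missing Airflow or kubernetes client
        return False
    return hasattr(module, "KubernetesPodOperator")


if __name__ == "__main__":
    try:
        import airflow
    except ImportError:
        print("Apache Airflow is not installed in this environment")
    else:
        version = airflow.__version__
        print(f"Airflow {version}, in tested range: {is_tested_airflow_version(version)}")
        print(f"KubernetesPodOperator importable: {pod_operator_available()}")
```

Versions outside the range are not necessarily broken. The prerequisites above only say they have not been tested.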

Setting up a DAG repository on Git

In order to use Apache Airflow with Elyra, it must be configured to use a Git repository to store DAGs.

- Create a private repository on github.com, GitHub Enterprise, gitlab.com, or GitLab Enterprise. (Elyra produces DAGs that contain unencrypted credentials, so you should not use a public repository.) Next, create a branch (e.g. `main`) in your repository. You will reference this branch later as the location where DAGs are stored.

- Generate a personal access token with push access to the repository. Elyra uses this token to upload DAGs.

- Generate an SSH key with read access to the repository. Apache Airflow uses a git-sync container to keep its collection of DAGs in sync with the content of the Git repository, and git-sync authenticates with this SSH key. Note: Make sure to generate the SSH key using the RSA algorithm.
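This guide requires an RSA key, so you may want to confirm the key type before storing the key in a Kubernetes secret. A key generated with `ssh-keygen -t rsa -b 4096` produces a public key whose first field is `ssh-rsa`. The minimal Python sketch below checks that field. The helper names and the `~/.ssh/id_rsa.pub` location are assumptions.

```python
"""Check that the git-sync key pair uses RSA (hypothetical helper, not part of Elyra)."""
from pathlib import Path


def public_key_type(public_key: str) -> str:
    """Return the key-type field of an OpenSSH public key, e.g. 'ssh-rsa'."""
    fields = public_key.split()
    if not fields:
        raise ValueError("empty public key")
    return fields[0]


def is_rsa_key_file(path: str) -> bool:
    """True if the OpenSSH public key file at `path` holds an RSA key."""
    text = Path(path).expanduser().read_text()
    if not text.strip():
        return False
    return public_key_type(text) == "ssh-rsa"


if __name__ == "__main__":
    # Assumed location: the same .ssh/id_rsa.pub used by the kubectl example in this guide.
    pub = Path("~/.ssh/id_rsa.pub").expanduser()
    if pub.exists():
        print(f"{pub} is RSA: {is_rsa_key_file(str(pub))}")
    else:
        print(f"{pub} not found")
```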

Take note of the following information:

- Git API endpoint (e.g. `https://api.github.com` for github.com or `https://gitlab.com` for gitlab.com)
- Repository name (e.g. `your-git-org/your-dag-repo`)
- Repository branch name (e.g. `main`)
- Personal access token (e.g. `4d79206e616d6520697320426f6e642e204a616d657320426f6e64`)

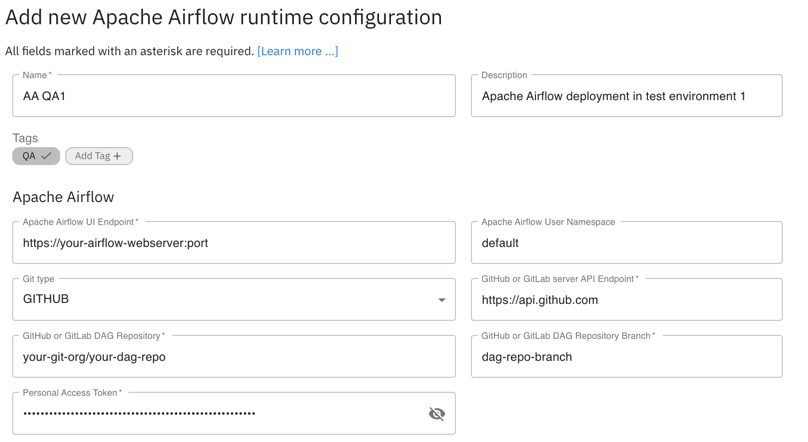

You need to provide this information in addition to your cloud object storage credentials when you create a runtime configuration in Elyra for the Apache Airflow deployment.
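Before entering these values in Elyra, you may want to confirm that the token actually has push access. The sketch below assumes a GitHub repository; for GitHub Enterprise the API endpoint is typically `https://<host>/api/v3`. It calls `GET /repos/{owner}/{repo}` and reads the `permissions.push` flag that GitHub returns for authenticated requests. GitLab uses a different API and is not covered. The helper names and the `GIT_API_ENDPOINT`, `GIT_REPO`, and `GIT_TOKEN` environment variables are hypothetical.

```python
"""Check that a GitHub personal access token can push to the DAG repository (sketch)."""
import json
import os
import urllib.error
import urllib.request


def repo_request(api_endpoint: str, repo: str, token: str) -> urllib.request.Request:
    """Build an authenticated GET /repos/{owner}/{repo} request."""
    url = f"{api_endpoint.rstrip('/')}/repos/{repo}"
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"token {token}",
            "Accept": "application/vnd.github+json",
        },
    )


def can_push(repo_metadata: dict) -> bool:
    """Read the 'permissions.push' flag GitHub includes for authenticated callers."""
    return bool(repo_metadata.get("permissions", {}).get("push", False))


def check_token(api_endpoint: str, repo: str, token: str) -> bool:
    """Return True if the token can see the repository and push to it."""
    request = repo_request(api_endpoint, repo, token)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return can_push(json.load(response))
    except urllib.error.HTTPError as err:
        # 401 usually means a bad token; 404 means missing or not visible to this token.
        print(f"HTTP {err.code} from {request.full_url}")
        return False
    except urllib.error.URLError as err:
        print(f"could not reach {request.full_url}: {err.reason}")
        return False


if __name__ == "__main__":
    # Hypothetical variable names; fill them with the values you noted for Elyra.
    token = os.environ.get("GIT_TOKEN")
    if not token:
        print("Set GIT_TOKEN (and optionally GIT_API_ENDPOINT, GIT_REPO) first")
    else:
        ok = check_token(
            os.environ.get("GIT_API_ENDPOINT", "https://api.github.com"),
            os.environ.get("GIT_REPO", "your-git-org/your-dag-repo"),
            token,
        )
        print("push access:", ok)
```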

Deploying Airflow on a new Kubernetes cluster

To deploy Apache Airflow on a new Kubernetes cluster:

- Create a Kubernetes secret containing the SSH key that you created earlier.

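The example below creates a secret named `airflow-secret` in the `airflow` namespace from three files. Replace the secret name, file names, and locations as appropriate for your environment. The namespace must already exist; create it with `kubectl create namespace airflow` if necessary.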

```bash
kubectl create secret generic airflow-secret \
  --from-file=id_rsa=.ssh/id_rsa \
  --from-file=known_hosts=.ssh/known_hosts \
  --from-file=id_rsa.pub=.ssh/id_rsa.pub \
  -n airflow
```

- Download, review, and customize the sample `helm` configuration (or customize an existing configuration). This sample configuration uses the `KubernetesExecutor` by default.
  - Set `git.url` to the URL of the private repository you created earlier, e.g. `ssh://git@github.com/your-git-org/your-dag-repo`. Note: Make sure your SSH URL contains only forward slashes (`ssh://git@github.com/org/repo`, not the scp-style `git@github.com:org/repo`).
  - Set `git.ref` to the DAG branch you created earlier, e.g. `main`.
  - Set `git.secret` to the name of the secret you created, e.g. `airflow-secret`.
  - Adjust `git.gitSync.refreshTime` as desired.

  - Set `airflow.image.repository` to `elyra/airflow`.

Example excerpt from a customized configuration:

```yaml
## configs for the DAG git repository & sync container
##
git:
  ## url of the git repository
  ##
  ## EXAMPLE: (HTTP)
  ##   url: "https://github.com/torvalds/linux.git"
  ##
  ## EXAMPLE: (SSH)
  ##   url: "ssh://git@github.com/torvalds/linux.git"
  ##
  url: "ssh://git@github.com/your-git-org/your-dag-repo"

  ## the branch/tag/sha1 which we clone
  ##
  ref: "main"

  ## the name of a pre-created secret containing files for ~/.ssh/
  ##
  ## NOTE:
  ##  - this is ONLY RELEVANT for SSH git repos
  ##  - the secret commonly includes files: id_rsa, id_rsa.pub, known_hosts
  ##  - known_hosts is NOT NEEDED if `git.sshKeyscan` is true
  ##
  secret: "airflow-secret"

  ...

  gitSync:
    ...
    refreshTime: 10

airflow:
  ## configs for the docker image of the web/scheduler/worker
  ##
  image:
    repository: elyra/airflow
```

The container image is created using this Dockerfile and published on Docker Hub and quay.io.

- Install Apache Airflow using the customized configuration.

```bash
helm install "airflow" stable/airflow --namespace airflow --values path/to/your_customized_helm_values.yaml
```
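If git-sync cannot clone the DAG repository after installation, the `git.url` value is one thing worth checking. The minimal Python sketch below flags the mistakes that the forward-slash note in this guide warns about. These include the scp-style `git@github.com:org/repo` form and a `:` after the host, which an `ssh://` URL parser reads as a port. The sketch assumes SSH access as configured in this guide. `git_url_problems` is a hypothetical helper, and its checks are heuristics rather than a full Git URL validator.

```python
"""Heuristic checks for the git.url Helm value (hypothetical helper)."""
from urllib.parse import urlsplit


def git_url_problems(url: str) -> list:
    """Return human-readable problems with an SSH git.url; [] means it looks usable."""
    if not url.startswith("ssh://"):
        # Covers scp-style URLs such as git@github.com:org/repo.
        return ["use the ssh:// form, e.g. ssh://git@github.com/org/repo"]
    problems = []
    if "\\" in url:
        problems.append("use forward slashes only")
    parts = urlsplit(url)
    try:
        _ = parts.port  # 'github.com:org' makes urlsplit treat 'org' as a port
    except ValueError:
        problems.append("':' after the host is parsed as a port; use '/' before the path")
    # Expect at least <org>/<repo> after the host.
    if parts.path.strip("/").count("/") < 1:
        problems.append("path should look like /<org>/<repo>")
    return problems


if __name__ == "__main__":
    for candidate in (
        "ssh://git@github.com/your-git-org/your-dag-repo",
        "ssh://git@github.com:your-git-org/your-dag-repo",
        "git@github.com:your-git-org/your-dag-repo",
    ):
        print(candidate, "->", git_url_problems(candidate) or "OK")
```

For example, `git_url_problems("ssh://git@github.com:torvalds/linux.git")` reports the port problem, while `ssh://git@github.com/your-git-org/your-dag-repo` yields an empty list. A numeric port such as `ssh://git@gitlab.example.com:2222/org/repo` is accepted.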

Once Apache Airflow is deployed you are ready to create and run pipelines, as described in the tutorial.

Enabling Elyra pipelines in an existing Apache Airflow deployment

To enable running notebook pipelines on an existing Apache Airflow deployment:

- Enable Git as DAG storage by customizing the Git settings in `airflow.cfg`.
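In Airflow 1.10.x the Git settings live in the `[kubernetes]` section of `airflow.cfg`. The sketch below assumes the option names `git_repo`, `git_branch`, and `git_ssh_key_secret_name` from the default 1.10.x configuration; verify them against the `airflow.cfg` shipped with your version. It renders those settings both as an `airflow.cfg` snippet and as `AIRFLOW__{SECTION}__{KEY}` environment variables, which Airflow reads as overrides. The values and helper names are examples only.

```python
"""Render Git DAG-storage settings for an existing Airflow 1.10.x deployment (sketch)."""
import configparser
import io

# Example values; replace with your repository, branch, and secret name.
GIT_SETTINGS = {
    "git_repo": "ssh://git@github.com/your-git-org/your-dag-repo",
    "git_branch": "main",
    # Assumption: Airflow may expect the private key under a specific key name
    # inside this secret; check the documentation for your Airflow version.
    "git_ssh_key_secret_name": "airflow-secret",
}


def render_cfg_section(settings: dict, section: str = "kubernetes") -> str:
    """Return an airflow.cfg snippet to merge into the existing file by hand."""
    parser = configparser.ConfigParser(interpolation=None)
    parser[section] = settings
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


def to_env_vars(settings: dict, section: str = "kubernetes") -> dict:
    """Map options to Airflow's AIRFLOW__{SECTION}__{KEY} environment overrides."""
    return {
        f"AIRFLOW__{section.upper()}__{key.upper()}": value
        for key, value in settings.items()
    }


if __name__ == "__main__":
    print(render_cfg_section(GIT_SETTINGS))
    for name, value in to_env_vars(GIT_SETTINGS).items():
        print(f"{name}={value}")
```

Merge the printed snippet into the existing `[kubernetes]` section by hand. Rewriting `airflow.cfg` with `configparser` would discard its comments. Environment variables take precedence over `airflow.cfg`, which can be convenient when the scheduler and workers run as pods.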

Once Git-based DAG storage is configured, you are ready to create and run pipelines, as described in the tutorial.