Requirements and best practices for custom pipeline components

Components are the fundamental building blocks of pipelines in Elyra. This document outlines requirements that user-provided custom components must meet to be compatible with the Visual Pipeline Editor. Best practices for generic components are documented in the Best practices for file-based pipeline nodes topic.

Kubeflow Pipelines components

Requirements

- The component is implemented as described here.

- Python function-based components are not supported.

- The component specification must be accessible to the Visual Pipeline Editor and can be stored locally or remotely. Refer to the Managing pipeline components topic for details.

- If Kubeflow Pipelines is configured with Argo as the workflow engine and the emissary executor as the workflow executor, the component specification must meet the stated requirement. If Elyra detects a component that does not meet the requirement, it logs a warning.

Best practices

This documentation content is currently under development.

Apache Airflow components

Requirements

Configure fully qualified package names for custom operator classes

For Apache Airflow operators imported into Elyra using URL, filesystem, or directory-based component catalogs, Elyra must be configured to include information on the fully qualified package names for each custom operator class. This configuration is what makes it possible for Elyra to correctly render the import statements for each operator node in a given DAG.
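To illustrate why the fully qualified name matters, the following minimal sketch splits such a name into a module path and a class name and formats an import statement from the two parts. It is not Elyra's implementation; the helper name render_import is hypothetical, and the operator name is taken from the configuration example in this section.

```python
def render_import(qualified_name: str) -> str:
    """Format an import statement from a fully qualified class name (illustrative only)."""
    # Everything before the last dot is treated as the module path,
    # everything after it as the class name.
    module_path, _, class_name = qualified_name.rpartition(".")
    if not module_path or not class_name:
        raise ValueError(f"not a fully qualified class name: {qualified_name!r}")
    return f"from {module_path} import {class_name}"


import_line = render_import("airflow.operators.papermill_operator.PapermillOperator")
print(import_line)
```

A bare class name such as PapermillOperator carries no module path, so no import statement can be derived from it; the sketch raises a ValueError in that case.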

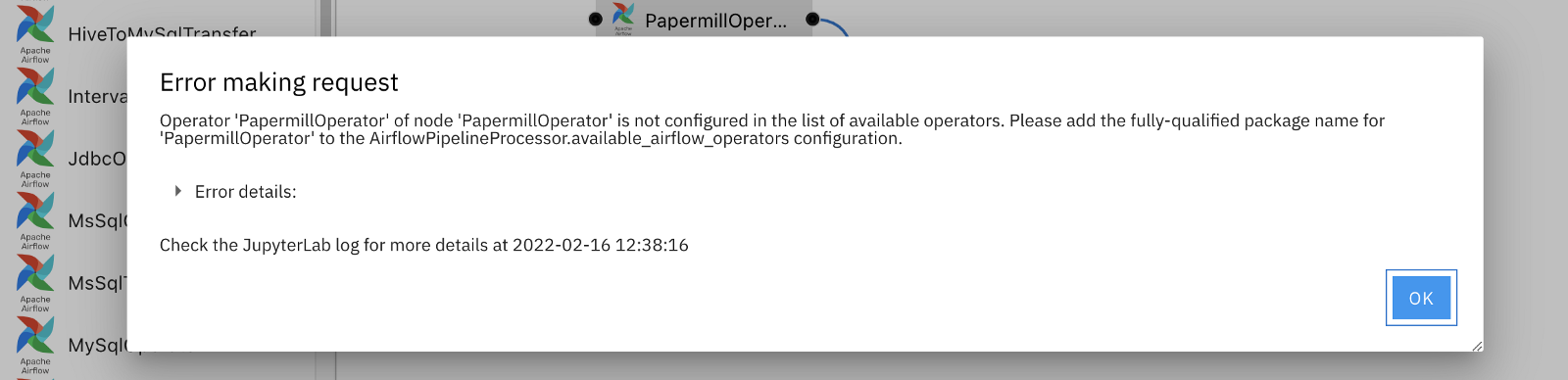

If you do not correctly configure an operator package name and try to export or submit a pipeline with custom components, Elyra will give you an error message similar to the following:

As seen above, the operators' fully qualified package names must be added to the available_airflow_operators variable. This variable has a list value and is a configurable trait in Elyra. To configure available_airflow_operators, first create a configuration file from the command line (if you do not already have one):

$ jupyter elyra --generate-config

Open the configuration file (a Python file) and find the PipelineProcessor(LoggingConfigurable) header. Using c.AirflowPipelineProcessor.available_airflow_operators as the variable name, modify the variable as needed using Python list methods such as append or extend, or overwrite all existing values with an assignment.

For example, if you want to use the SlackAPIPostOperator from the Slack provider package and the PapermillOperator from the core package in your pipelines, your configuration will look like this:

    ...
    #------------------------------------------------------------------------------
    # PipelineProcessor(LoggingConfigurable) configuration
    #------------------------------------------------------------------------------
    c.AirflowPipelineProcessor.available_airflow_operators.extend(
        [
            "airflow.providers.slack.operators.SlackAPIPostOperator",
            "airflow.operators.papermill_operator.PapermillOperator"
        ]
    )
    ...

There is no need to restart JupyterLab in order for these changes to be picked up. You can now successfully export or submit a pipeline with these custom components.
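The example above uses extend. As a sketch of the other two options mentioned earlier, the snippet below applies append and plain assignment to an ordinary Python list. The list is only a stand-in so the snippet runs on its own; in the configuration file the target would be c.AirflowPipelineProcessor.available_airflow_operators, and the BashOperator entry is an assumed pre-existing value, not something Elyra is known to ship.

```python
# Stand-in for the trait value (assumption: one entry is already present).
available_airflow_operators = ["airflow.operators.bash_operator.BashOperator"]

# append adds a single fully qualified class name and keeps existing entries.
available_airflow_operators.append(
    "airflow.operators.papermill_operator.PapermillOperator"
)
after_append = list(available_airflow_operators)

# Assignment replaces every existing entry with the new list.
available_airflow_operators = [
    "airflow.providers.slack.operators.SlackAPIPostOperator",
]
after_assignment = list(available_airflow_operators)
```

Use append or extend when existing entries should be kept, and assignment only when the list should contain exactly the operators you name.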

Best practices

This documentation content is currently under development.

Troubleshooting missing pipeline components

Pipeline files include references to components, but not the component definitions. When you open a pipeline in the Visual Pipeline Editor, Elyra tries to match these component references with its local component inventory. If a component reference cannot be resolved, an error message is displayed that provides information you can use to locate the component.

The error message includes a key that identifies the catalog type that made the component available in the environment where the pipeline was created. Each catalog type has its own set of keys that are used to resolve a component reference.
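As a quick reference, the mapping below collects the keys that the following sections ask you to note for each catalog type. It is a sketch assembled from this document only: the dictionary, the function name, and the sample reference are hypothetical and do not reflect how Elyra stores component references internally.

```python
# Keys this document tells you to note, per catalog type.
KEYS_TO_NOTE = {
    "local-file-catalog": ("base_dir", "path"),
    "local-directory-catalog": ("base_dir", "path"),
    "url-catalog": ("url",),
    "airflow-package-catalog": ("airflow_package",),
    "airflow-provider-package-catalog": ("provider_package",),
}


def values_to_note(catalog_type: str, reference: dict) -> dict:
    """Return the relevant key/value pairs from a reported component reference."""
    keys = KEYS_TO_NOTE.get(catalog_type)
    if keys is None:
        # Catalog types without keys listed in this document.
        return {}
    # A key may be absent or empty (for example base_dir), so default to "".
    return {key: reference.get(key, "") for key in keys}


# Hypothetical values, shaped like the details shown in an error message.
sample = {"base_dir": "", "path": "components/filter_text.yaml"}
noted = values_to_note("local-file-catalog", sample)
```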

Filesystem catalog (type: local-file-catalog)

The filesystem component catalog provides access to components that are stored in the local filesystem. In this context local refers to the environment where JupyterLab is running.

- Take note of the displayed base_dir and path. (base_dir might be empty.) In the environment where the pipeline was created, the file (path's value) was stored in the base_dir directory.

- Obtain a copy of the file and store it in the local file system, following the best practices recommendation.

- Add a new filesystem component catalog, providing the appropriate values as input.

Directory catalog (type: local-directory-catalog)

The directory component catalog provides access to components that are stored in the local filesystem. In this context local refers to the environment where JupyterLab is running.

- Take note of the displayed base_dir and path. In the environment where the pipeline was created, the file (path's value) was stored in the base_dir directory.

- Obtain a copy of the file and store it in any directory in the local file system that JupyterLab has access to.

- Add a new directory component catalog, providing the local directory name as input.
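For both the filesystem and the directory catalog types, base_dir and path together describe where the file lived in the original environment. The sketch below combines them, assuming POSIX-style paths; the function name and the sample values are hypothetical.

```python
from pathlib import PurePosixPath


def original_location(base_dir: str, path: str) -> str:
    """Combine the displayed base_dir and path into one location (illustrative only)."""
    # base_dir might be empty, in which case path is used on its own.
    if not base_dir:
        return str(PurePosixPath(path))
    return str(PurePosixPath(base_dir) / path)


with_base = original_location("/opt/components", "kfp/filter_text.yaml")
without_base = original_location("", "kfp/filter_text.yaml")
```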

URL catalog (type: url-catalog)

The URL component catalog provides access to components that are stored on the web.

- Take note of the displayed url.

- Add a new URL component catalog, providing the URL as input.

Apache Airflow package catalog (type: airflow-package-catalog)

The Apache Airflow package catalog provides access to Apache Airflow operators that are stored in built distributions.

- Take note of the displayed airflow_package, which identifies the Apache Airflow built distribution that includes the missing operator.

- Add a new Apache Airflow package catalog, providing the download URL for the listed distribution as input. For example, if the value of airflow_package is apache_airflow-1.10.15-py2.py3-none-any.whl, specify as URL https://files.pythonhosted.org/packages/f0/3a/f5ce74b2bdbbe59c925bb3398ec0781b66a64b8a23e2f6adc7ab9f1005d9/apache_airflow-1.10.15-py2.py3-none-any.whl

Apache Airflow provider package catalog (type: airflow-provider-package-catalog)

The Apache Airflow provider package catalog provides access to Apache Airflow operators that are stored in Apache Airflow provider packages.

- Take note of the displayed provider_package, which identifies the Apache Airflow provider package that includes the missing operator.

- Add a new Apache Airflow provider package catalog, providing the download URL for the listed package as input. For example, if the value of provider_package is apache_airflow_providers_http-2.0.2-py3-none-any.whl, specify as URL https://files.pythonhosted.org/packages/a1/08/91653e9f394cbefe356ac07db809be7e69cc89b094379ad91d6cef3d2bc9/apache_airflow_providers_http-2.0.2-py3-none-any.whl
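The airflow_package and provider_package values in the examples above are wheel file names, which encode the distribution name and version. The sketch below splits such a name following the wheel naming convention (name, version, optional build tag, Python tag, ABI tag, platform tag) and derives the PyPI release page where download links are listed. The function names are hypothetical, and the sketch assumes the distribution is published on PyPI under the hyphenated form of its name.

```python
def parse_wheel_filename(filename: str) -> dict:
    """Split a wheel file name into its name and version (illustrative only)."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file name: {filename!r}")
    parts = filename[: -len(".whl")].split("-")
    # Five parts without a build tag, six with one.
    if len(parts) not in (5, 6):
        raise ValueError(f"unexpected wheel file name: {filename!r}")
    return {"name": parts[0], "version": parts[1]}


def pypi_release_page(filename: str) -> str:
    """Build the PyPI release page URL for a wheel (assumes a PyPI-hosted package)."""
    info = parse_wheel_filename(filename)
    # Wheel file names use underscores where the project name uses hyphens.
    project = info["name"].replace("_", "-")
    return f"https://pypi.org/project/{project}/{info['version']}/#files"


page = pypi_release_page("apache_airflow_providers_http-2.0.2-py3-none-any.whl")
print(page)
```

The release page lists the files of that version; the link to the matching .whl file is the download URL to provide as catalog input.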

Kubeflow Pipelines example components catalog (type: elyra-kfp-examples-catalog)

The missing component definition is stored in the Kubeflow Pipelines example components catalog. Refer to the documentation for details on how to install and enable the connector.

Apache Airflow example components catalog (type: elyra-airflow-examples-catalog)

The missing component definition is stored in the Apache Airflow example components catalog. Refer to the documentation for details on how to install and enable the connector.

Machine Learning Exchange catalog (type: mlx-catalog)

The missing component definition is stored in a Machine Learning Exchange deployment.

- Contact the user who created the pipeline to request deployment connectivity details.

- Install and configure the connector as outlined in the connector documentation.

Component catalogs not listed here

Check the component catalog connector directory if the referenced catalog type is not listed here.